The submarine cable industry’s current practices will sustain our future capacity requirements. We definitely have enough capacity to take us well into the future. Right?

Let’s take a closer look to find out.

Demand Side

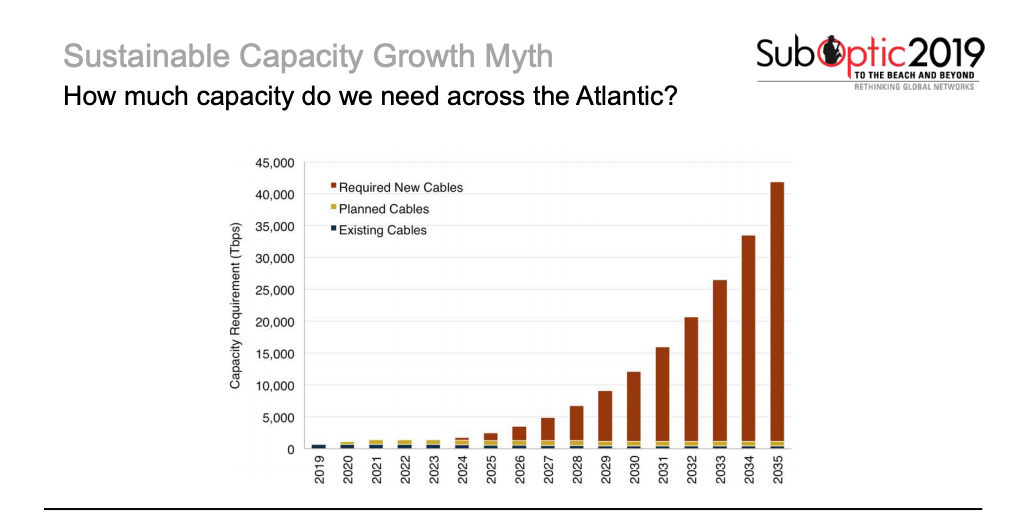

I selected the Atlantic—the most heavily used route—to better understand capacity requirements. How much capacity do we need to cross the Atlantic? How much will we need in the future?

At first glance, we see that our capacity needs are going up.

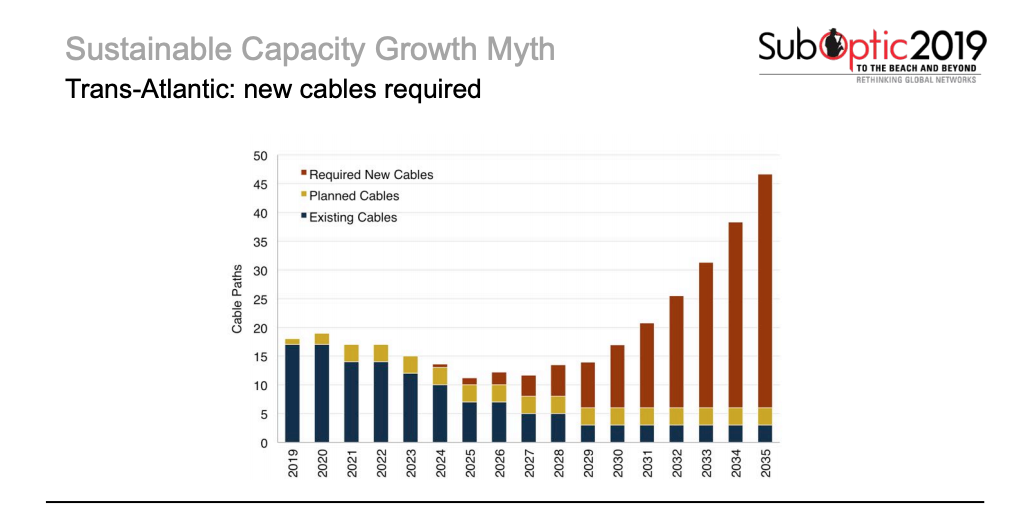

That’s all well and good, but what we specifically want to know is the number of cables that we’ll need in time.

While capacity is indeed growing, the rate of growth has been decelerating. Even with a conservative growth estimate, it’s unclear whether content providers could keep supporting their customers on the amount of capacity we’re forecasting.

Take YouTube, a small portion of what Google carries, as an example: every second, users upload 10 hours of new video content. If we do the math, that’s 80+ years of new videos every day. Google is not only storing that, but also crunching the numbers for a search index and distribution. That’s…a lot.
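For anyone who wants to check the math, here is a minimal sketch in Python. It takes the rounded 10-hours-per-second figure at face value and assumes a 365-day year; the function name is ours, purely for illustration.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds
HOURS_PER_YEAR = 24 * 365       # assumes a 365-day year


def years_of_video_per_day(hours_uploaded_per_second: float) -> float:
    """Convert an upload rate (hours of video per second) into years of video per day."""
    hours_per_day = hours_uploaded_per_second * SECONDS_PER_DAY
    return hours_per_day / HOURS_PER_YEAR


years = years_of_video_per_day(10)
print(f"{years:.1f} years of new video uploaded per day")
```

With the rounded input, the result lands a little under 100 years per day, comfortably clearing the 80+ mark.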

Supply Side

Let’s assume it’s possible that content providers get to a hypothetically lower level of demand growth. What about supply?

Again trying to connect growth numbers to an exact number of cables, and being generous in our assumptions, I’ll say that by 2023 we’ll achieve 640 Tbps per submarine cable. That’s about three times the maximum that our technology can currently handle.

Even more aggressively, I’m assuming that the industry gets to one-petabit-per-second (1,000 Tbps) cables by 2025. Surely this massive step up in capacity will keep us from having to build many more cables to keep up with our forecasted capacity demand.

Even when you combine our conservative demand estimates with aggressive supply assumptions, we’re still looking at the need for 40+ new cables over the next 15 years. In 2035 alone, we’d need eight new cables across the Atlantic (!). And if my generous assumption that the industry achieves petabit cables in this timeframe doesn’t work out, we’re looking at even larger numbers.
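To make the demand-versus-supply logic concrete, here is a rough sketch of how a cable count falls out of a demand forecast and a per-cable capacity assumption. It is not the model behind the numbers above: the function name and the sample inputs are hypothetical placeholders, and the sketch ignores real-world factors such as older cables being retired.

```python
import math


def new_cables_per_year(demand_tbps, cable_capacity_tbps, installed_tbps=0.0):
    """Return how many new cables to add each year so supply covers demand.

    demand_tbps: required capacity for each year (Tbps), in chronological order.
    cable_capacity_tbps: capacity of a cable built in each of those years (Tbps).
    installed_tbps: capacity already in service before the first year.
    """
    builds = []
    for demand, per_cable in zip(demand_tbps, cable_capacity_tbps):
        shortfall = max(0.0, demand - installed_tbps)
        cables = math.ceil(shortfall / per_cable)  # cables come in whole units
        installed_tbps += cables * per_cable
        builds.append(cables)
    return builds


# Hypothetical inputs, NOT a real forecast: demand grows 30% a year from a
# made-up 2,000 Tbps, and every new cable is a 1,000 Tbps (petabit) system.
demand = [2000 * 1.3 ** year for year in range(5)]
capacity = [1000] * 5
print(new_cables_per_year(demand, capacity, installed_tbps=2000))
```

Swap in a lower per-cable capacity and the yearly build counts climb quickly, which is exactly the risk if petabit cables don’t arrive on schedule.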

This seems crazy, right? Possibly.

What are the Options?

The first option is for the industry to spend more. More investment in capital expenditure for laying cables—simple as that.

The second option is for the biggest users of bandwidth—content providers—to build more data centers closer to their end users rather than centralizing some of their bigger compute tasks in mega data centers.

The downside of this second option is that it costs money, too. A large data center can cost $500 million to $1 billion. And because electricity costs vary by location, building closer to end users means content providers lose the ability to place their data centers in strategic locations with cheap power or favorable tax structures.

Our third option? Let’s hope for a technological breakthrough on the supply side or within a content provider’s ability to compress their data.

But there’s a cost here, too. Any technological breakthrough comes with an R&D cost, as well as an opportunity cost.

The final option is for big users to allow their network to degrade, ultimately hurting user experience. Content providers have said that they believe there is a connection between latency on their systems and ad revenues, making this scenario the least likely.

The Numbers

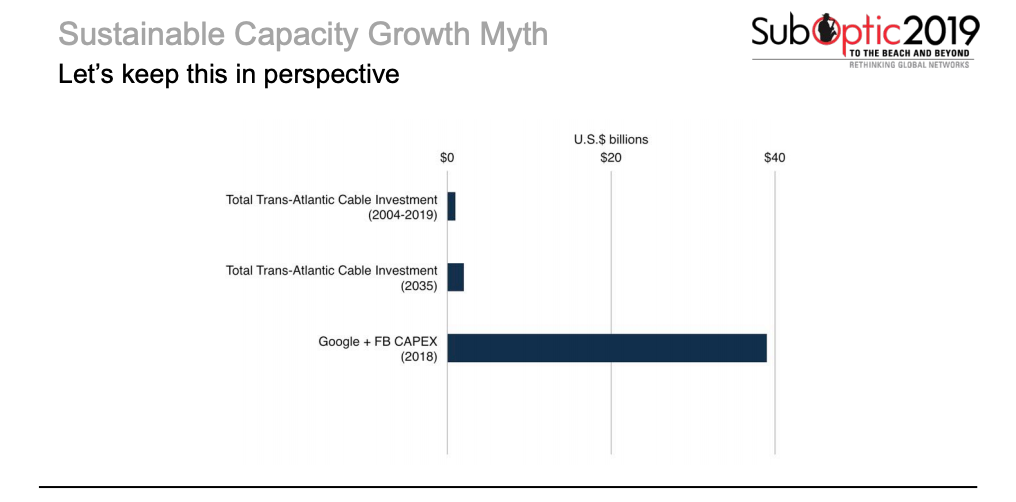

Compare total cable investment over the last 15 years (less than $1 billion) with our forecasted needs for 2035: meeting them would require us to more than double this investment. That’s huge.

But there’s more to consider.

The investment from Facebook and Google alone last year was also huge—$39 billion—with much of this investment going to data centers. The subsea industry might think of itself as masters of the sea, but it’s actually a servant of the data center industry.

The Verdict

Something’s gotta give. The industry is absolutely going to need more cables in the future.

Knowing what we do about content provider demand—and that user experience is tied to their massive ad revenue—it doesn’t seem outlandish to predict that we’ll see more cables from Google, Facebook, Microsoft, and the like. Perhaps they’ll also spend money devising new schemes to reduce their traffic.

But the notion that our current practices will sustain our future capacity requirements? That myth is busted.

Tim Stronge

Tim Stronge is Chief Research Officer at TeleGeography. His responsibilities span many of our research practices, including network infrastructure, bandwidth demand modeling, cross-border traffic flows, and telecom services pricing.